There are many ways to transfer your tables data to Bigquery from SQL server. Each of them can have it’s advantages and disadvantages. Exporting to a CSV is simple but sometimes not very robust in case your data contains delimiter or newline character then BigQuery will fail to import that CSV file due to parsing error.

Here, I am going to explain how to transfer data from SQL server to BigQuery in the arguably most robust way using JDBC connection. You don’t need to care about any delimiter or newline character stuff. You will need just below mapping of data type from SQL server to Bigquery to create the schema in your BQ dataset, accordingly.

SQL Server To BigQuery Data Type Mapping

- nvarchar, varchar, char will map to STRING

- datetime, date will map to either INTEGER (UNIXMILLIS)

- int, smallint, bigint will map to INTEGER

- bit will map to BOOLEAN

- numeric will map to NUMERIC

Download JDBC driver from Microsoft Website: https://docs.microsoft.com/en-us/sql/connect/jdbc/download-microsoft-jdbc-driver-for-sql-server?view=sql-server-ver15

Extract the downloaded zip or tar.gz file and upload all the jar files to a cloud storage location. This path we will need to provide as a parameter in our job configuration, soon.

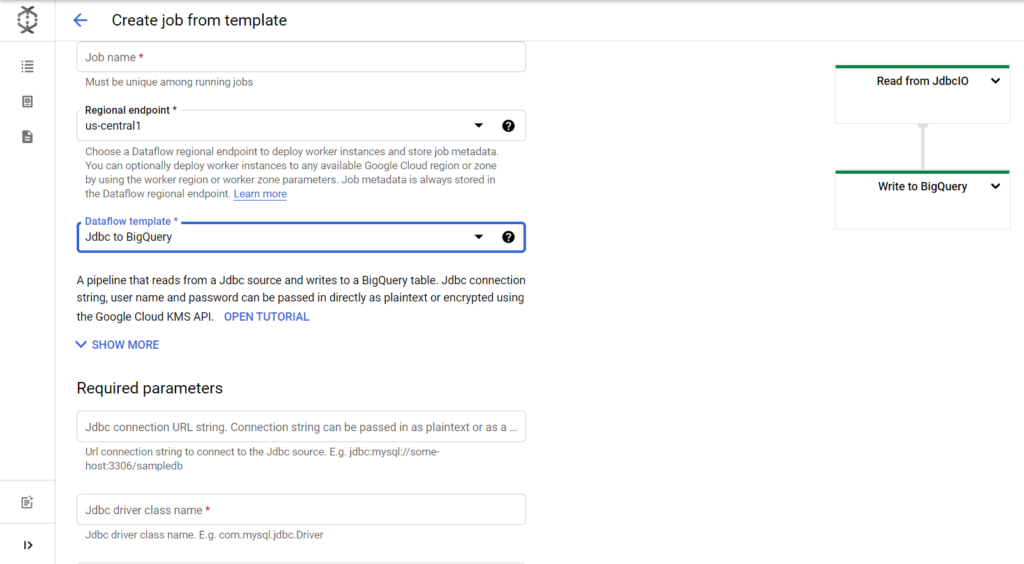

Job name: The name of job which you want to use to identify the run. Can be anything you want.

Regional Endpoint: GCP location where workers will be deployed.

Dataflow template: JDBC to Bigquery

Jdbc connection URL: jdbc:sqlserver://server_ip_address;databaseName=your_database;user=yourusername;password=yourpassword

Jdbc driver class name: com.microsoft.sqlserver.jdbc.SQLServerDriver

Jdbc source SQL query: SELECT * FROM YOUR_TABLE

BigQuery output table: gcp_project_id:your_bq_dataset.your_table_name

GCS paths for Jdbc drivers: gs://yourbucket/path_to_driver_jar/mssql-jdbc-9.2.1.jre8.jar,gs://yourbucket/path_to_driver_jar/mssql-jdbc-9.2.1.jre11.jar

Temporary directory for BigQuery loading process: gs://ma-da-sales-demo/jdbc/temp

Temporary location: gs://ma-da-sales-demo/jdbc/temp_bq

Worker IP Address Configuration: Public

Subnetwork: regions/us-central1/subnetworks/default

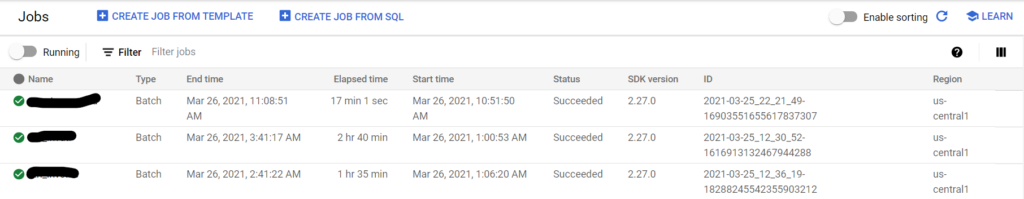

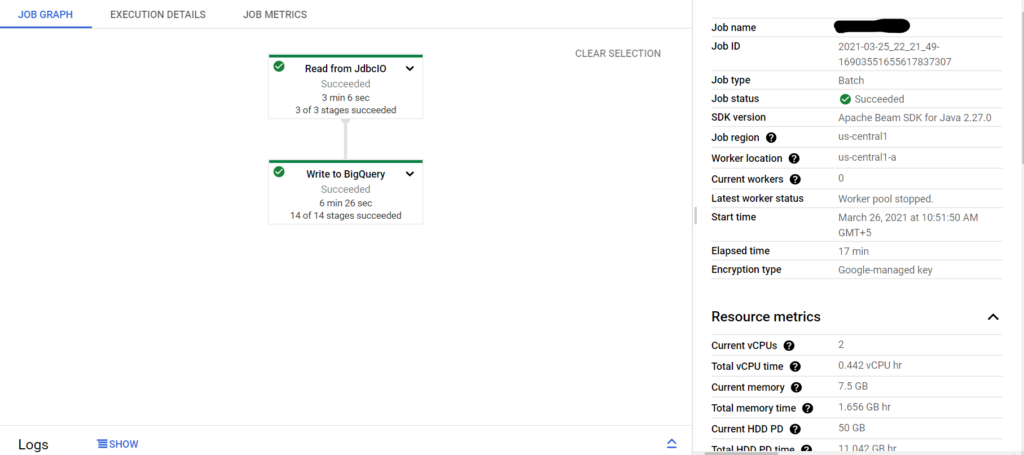

After you have filled the above parameters, just hit the submit button and your data transfer will be started. You can monitor the progress in the dataflow dashboard as below.

Note:

- The data will be added in APPEND mode to Bigquery.

- This method can be used to transfer data from any database which supports JDBC connections. You just need to download corresponding JDBC driver and provide their GCS path in the job configuration.